Implications for Corporate Oversight of Cybersecurity

How AI Will Impact Cybersecurity Regulatory and Disclosure Matters

Human Impact & Corporate Alignment

Recognizing that AI is fundamentally a human endeavor is imperative to implementing AI technology successfully. AI models often lack transparency: black-box algorithms make their decision-making processes difficult to understand. Because AI can inherit biases from its training data, governance models should be reviewed to ensure equitable treatment, and the models themselves should be tuned frequently to ensure that they operate within expected risk tolerances.

Approaching AI from the perspective of a company’s mission and values helps align strategic decisions with that mission. Responsibility for AI oversight can reside with the full board, an existing committee (e.g., audit or technology), or a dedicated AI committee.

Regulatory Impact

Traditional regulatory models struggle to keep pace with rapidly evolving technology, and national legislation complicates this issue. The current state of AI regulation is a patchwork of mandatory and voluntary AI frameworks.

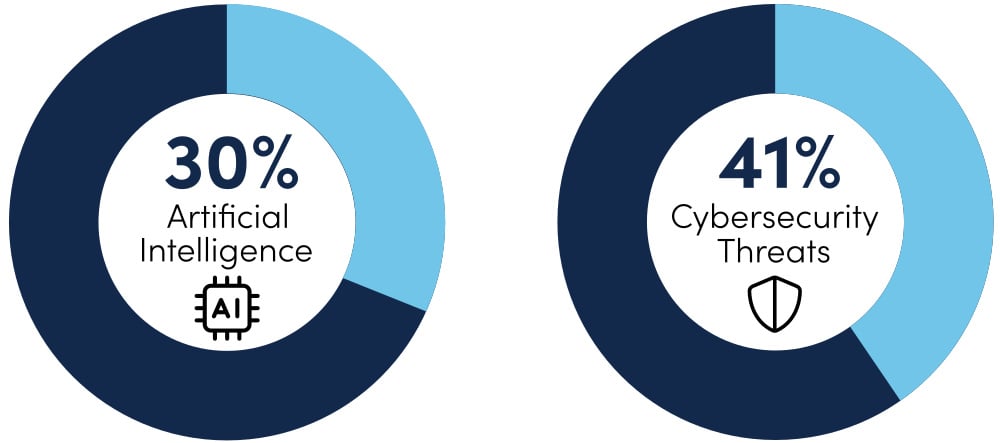

According to NACD’s 2025 Trends and Priorities Survey, almost one-third (30 percent) of corporate directors believe that artificial intelligence will be a top priority for their business in 2025, and another 41 percent selected cybersecurity threats as a top trend. There are many facets to this assessment, but inherent in it is the judgment that AI raises the overall risk posture of any entity employing these new technologies. Managing that risk therefore becomes an important matter for boards. Beyond the operational and security challenges an enterprise faces when implementing rapidly evolving AI systems, an essential factor in evaluating AI’s potential impact is the increasingly complicated regulatory and compliance risk that accompanies such a transformation.

Cybersecurity & AI as Top Trends for Directors in 2025

Source: 2025 NACD Trends and Priorities Survey, n=251

Regulatory and compliance risks are compounded by the fact that AI expertise remains scarce and the AI regulations that do exist are “nascent and highly fragmented.” A Swimlane and Sapio Research survey of 500 cybersecurity decision-makers found that 44 percent find it challenging to hire and retain personnel with AI expertise. Similarly, an NACD survey found that only 28 percent of board respondents say AI is a regular feature of board conversations.

Cybersecurity regulation remains a challenge for companies of all shapes and sizes: only 40 percent of cybersecurity decision-makers believe that their organizations “have made the necessary investments to fully comply with relevant cybersecurity regulations, while 19% admit to having done very little.” Artificial intelligence adds a new, more intricate layer of regulatory and compliance risk that boards will have to consider.

A recent EY case study found that “regulators often take a wait-and-see approach to nascent technology, with guidance trailing innovation by three to five years.” As for compliance, “historically, compliance professionals have treated technological innovation with skepticism.” Nonetheless, as the rapid global growth of cybersecurity regulation over the last few years shows, when regulation does come, it comes fast and furious. There are early signs that the new paradigm is shortening the three-to-five-year window referenced above. Companies that adopt a similar “wait and see” approach to AI regulation do so at their peril: many will find themselves overwhelmed as they struggle to achieve compliance with limited resources and short timelines. Boards should anticipate that as AI advances at an increasingly rapid rate, companies will find it even harder to keep pace with emerging regulation and to understand its effect on the business. That difficulty, in turn, creates additional regulatory and compliance risk around AI deployment.

Accordingly, “new AI technologies will force compliance professionals to rethink existing operational models and approaches to risk management.” A more proactive approach by both government and deployers of AI technologies to crafting sensible regulation may help smooth the incorporation of AI into business functions. As NACD notes, fulfilling the compliance responsibility “for AI regulation will be challenging, but regulations may become a lever to ensure that companies are engaging with AI systems safely and responsibly.”

The vast majority of pending legislation, both domestic and international, calls for ranking AI uses by risk, typically into prohibited, high, low, and minimal risk tiers.

AI Seven-Step Governance Program

Currently, nearly all evolving regulatory structures suggest that, at a minimum, high-risk AI use cases should follow a seven-step governance program embodied in current EU regulatory structures:

- Confirm High-Quality Data Use: The term “high-quality data” generally means that the data used for high-risk AI is material and relevant to the use case.

- Continuous Monitoring: Ensure there is continuous monitoring, testing, and auditing pre- and post-deployment of the high-risk use of AI.

- Risk Assessment: Perform risk assessments based on the pre-deployment testing, auditing, and monitoring of the AI. This will require close communication with the enterprise AI management team to ensure that the required processes are in place.

- Technical Documentation: Ensure that all required technical documentation is complete and that the risk mitigations identified through the continuous monitoring process have been implemented. All users, licensees, and deployers of AI must do their own testing.

- Transparency: Licensors and licensees of AI will be expected to be fully transparent with end users as to the capabilities and limitations of the AI.

- Human Oversight: Trustworthy AI legal frameworks call for a degree of human oversight to correct deviations from expected uses in real time. This may require a research scientist within the company who can adjust the AI model to bring it back within safety parameters.

- Fail-Safe: If remedial mitigation steps cannot restore the AI to approved parameters, a fail-safe “kill switch” is needed.

Source: National Association of Corporate Directors
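For organizations that track this program in their own tooling, the risk tiering and the seven steps can be expressed as a simple pre-deployment gate. The Python below is a minimal sketch, not part of the NACD or EU model: the class, constant, and function names are hypothetical, and it assumes that only high-risk use cases must complete all seven steps before deployment while prohibited use cases are blocked outright.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskTier(Enum):
    # Risk tiers as named in the text; the labels are illustrative.
    PROHIBITED = "prohibited"
    HIGH = "high"
    LOW = "low"
    MINIMAL = "minimal"


# The seven governance steps listed above, as hypothetical checklist keys.
SEVEN_STEPS = (
    "high_quality_data",
    "continuous_monitoring",
    "risk_assessment",
    "technical_documentation",
    "transparency",
    "human_oversight",
    "fail_safe",
)


@dataclass
class AIUseCase:
    name: str
    tier: RiskTier
    completed_steps: set[str] = field(default_factory=set)


def deployment_gaps(use_case: AIUseCase) -> list[str]:
    """Return the governance steps still missing before deployment.

    Assumption: only high-risk use cases are gated on all seven steps;
    prohibited use cases cannot be deployed at all.
    """
    if use_case.tier is RiskTier.PROHIBITED:
        raise ValueError(f"{use_case.name}: prohibited use case cannot be deployed")
    if use_case.tier is not RiskTier.HIGH:
        return []
    # Preserve the order of SEVEN_STEPS so reports read like the checklist.
    return [step for step in SEVEN_STEPS if step not in use_case.completed_steps]


if __name__ == "__main__":
    triage = AIUseCase(
        "alert triage assistant",
        RiskTier.HIGH,
        {"high_quality_data", "continuous_monitoring", "risk_assessment"},
    )
    print(deployment_gaps(triage))
    # prints ['technical_documentation', 'transparency', 'human_oversight', 'fail_safe']
```

Returning the list of open steps, rather than a simple pass/fail, gives management a concrete status report to bring to the board for each high-risk use case.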

As with the model for cybersecurity advocated in the NACD-ISA Director’s Handbook on Cyber-Risk Oversight, AI security should not be “bolted on” at the end of the process. Rather, AI systems, like cybersecurity, are best integrated throughout the full development life cycle. Most of the steps listed above are relatively low-cost at the outset, and boards should ensure they are in place early: it is better to build the company’s AI in accordance with regulator expectations from the start than to invest in AI use cases that may eventually be deemed noncompliant.

Ultimately, both the internal use of AI in conducting the company’s business and the embedding of AI into its products and services need to be meticulously governed. Customers and shareholders will want confidence that the company is using AI in a way that accelerates growth in shareholder equity without incurring the serious regulatory or quality harms that can befall a company that does not use AI responsibly.

A governance and engineering framework is needed to ensure that every component of AI use follows a human-centered AI approach. The EU AI Act, described above, is Europe’s approach to trustworthy AI use. A variety of voluntary frameworks also help guide the responsible and trustworthy development and use of AI, including the National Institute of Standards and Technology’s (NIST) AI Risk Management Framework, the Government Accountability Office’s (GAO) AI Accountability Framework, and the OECD’s Principles on Artificial Intelligence. In addition, private-sector AI frameworks address specific industries, cybersecurity concerns, system safety, tool acquisition, and rapidly accelerating agentic systems.

Boards should stay informed about emerging legislation and regulation and adapt quickly as regulatory frameworks evolve. Where aspects of AI remain unregulated, organizations must create their own guidelines and safeguards to maintain the trust of their customers, shareholders, and partners.

Disclosure Imperatives

Adopting and complying with the laws and frameworks mentioned above, as well as emerging ones, has various implications for corporate disclosures. The following categories offer a reference for boards and directors of companies that deploy, develop, sell, or use AI tools as they consider how the company’s use of AI may affect its disclosure obligations.

Transparency and Accountability

Organizations deploying AI in cybersecurity should disclose their use of AI-driven tools. This transparency ensures that stakeholders, including customers and investors, understand the technology’s role in security operations. Regulators also have a crucial role to play by mandating transparency regarding AI models, training data, and decision-making processes. Through such transparency, organizations can build trust and demonstrate their commitment to ethical practices.

Risk Assessment and Mitigation

When adopting AI, organizations must conduct thorough risk assessments that consider both the benefits and the limitations of AI in enhancing security. Principles 4 and 5 of the 2023 Director’s Handbook on Cyber-Risk Oversight provide a detailed model for modern cyber-risk assessment. Incorporating the AI-specific use cases and requirements noted above, from the EU framework and others, will help ensure that AI-specific risks are identified and addressed early in the acquisition life cycle. By disclosing these assessments, organizations can inform stakeholders about potential risks and how they plan to mitigate them. Effective communication around risk management ensures that AI adoption aligns with overall security objectives.

Incident Reporting and AI Failures

Prompt reporting is essential when AI-related incidents occur: organizations should disclose any AI failures or security breaches without delay. Regulators need mechanisms to track these incidents and assess their impact on overall security. Transparent reporting allows corrective action to be taken quickly, minimizing harm and maintaining trust. Building human-centered AI principles into a company’s AI strategy and operations helps ensure that harms from potentially unreliable AI results can be addressed quickly.

Imperative for Boards

Corporate oversight of AI in cybersecurity requires a holistic approach that balances strategic opportunities, risk management, and ethical considerations. By recognizing the human dimension of AI and staying informed about regulation, boards can effectively navigate this transformative landscape.