Implications for Corporate Oversight of Cybersecurity

AI Friend and Foe

Artificial intelligence (AI) has already significantly impacted business, and greater gains in efficiency and productivity are predicted as AI becomes more widely integrated. In fact, with business adoption of AI reaching 72 percent in 2024, that integration is already well underway. Overall, it’s estimated that AI will contribute a 21 percent net increase to the United States GDP by 2030. As more companies and consumers adopt AI in their operations and daily lives, the risks and benefits, both known and unknown, that this technology brings to companies and their cybersecurity will grow accordingly. Because rapid adoption introduces new risks alongside benefits to innovation and productivity, AI, like any other enterprise risk, needs to be overseen and governed at the board level.

When applied to a company’s cybersecurity program, AI can enhance capabilities in areas like automatic cyber threat detection, alert generation, malware identification, and data protection. AI’s enhanced data analysis capabilities can significantly improve the signal-to-noise ratio among log data coming into the security operations center, reducing false positives and quickly directing the security team’s attention toward the most critical threats. AI also has the potential to help predict weaknesses and assist security teams in making changes that prevent a breach in the first place. This capability allows companies to “get left of theft,” making it much harder for attackers to succeed. Overall, AI, when applied correctly, can be a force multiplier for corporate cybersecurity teams, strengthening a business’s defense systems while increasing efficiency, productivity, and profit in business operations.

However, despite its promise, implementing AI brings new risks, as with all new technology. A key risk is the lack of widespread awareness of AI’s potential dangers, as only a few leaders possess the necessary experience and education to understand the societal, organizational, and individual risks. The full range of AI risks and benefits has yet to be discovered, highlighting the imperative for continuous board education about the still-unknown organizational and cybersecurity consequences this technology could bring.

While AI can improve corporate cybersecurity performance, AI also provides new tools to threat actors. AI lowers the barrier to entry for cybercriminals by reducing the technical know-how required to launch cyberattacks and turbocharging the evolution of existing tactics, techniques, and procedures. Criminals and nation-state adversaries are already exploring the use of AI tools to enhance their tradecraft, improve the veracity and efficacy of their attack campaigns, and train less experienced workers to combat companies and governments using AI for defense.

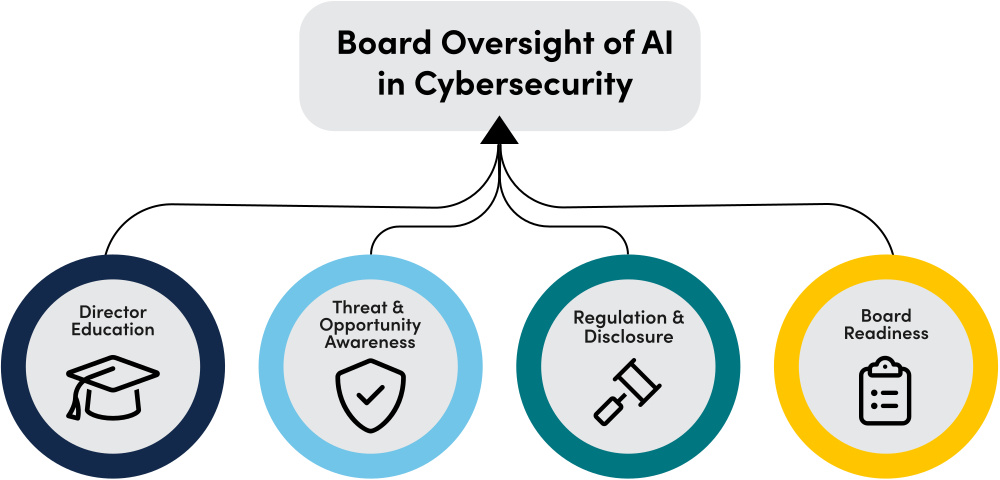

AI in Cybersecurity Oversight Imperatives

Source: National Association of Corporate Directors

Protecting the company’s workforce from AI’s harms and opportunities for misuse represents another risk area. Many companies’ greatest asset and product is their people. But how are they to leverage AI in a responsible, ethical, and compliant manner that delivers strategic benefits without exposing the organization to risk levels above appropriate thresholds? Boards should ensure that their company’s leadership understands how AI is used in their companies, adopts a governance and security framework that accounts for AI’s unique risks, develops use cases aligned with the company’s purpose, values, and governance principles, and communicates the responsible use of AI within their products and services. This transparency is essential to establishing and maintaining stakeholder and shareholder trust.

Imperative for Boards

Boards must educate themselves about AI’s implications within cybersecurity and operations. Understanding and awareness of AI’s technical advancements, new risks, and regulatory implications will be necessary for effective risk oversight. Boards cannot allow management to fall into the trap of either overlooking potential perils or overestimating an organization’s risk-mitigation capabilities. To fully realize the benefits of AI in their cybersecurity departments and their overall business, directors must understand what artificial intelligence is, its benefits, and the potential consequences or risks it can bring to their organizations.

Boards are uniquely positioned to play an important role in ensuring that management uses AI safely and responsibly to manage cyber risk across the organization, as described in detail in Principles Four and Five of the NACD-ISA 2023 Director’s Handbook on Cyber-Risk Oversight. This report is a supplement to that handbook, intended to educate directors about this critically important topic. By educating themselves about the various types of AI, the current applications of AI in cybersecurity departments, and the regulatory and disclosure implications, directors and boards will better understand the intersection of AI and cybersecurity and be better positioned to provide oversight of this strategically important technology.